Update 18 December 2023 — This morning the European Commission launched formal proceedings against Twitter / X for suspected infringements of the EU Digital Services Act. It will now investigate whether the Blue Tick deceives users, whether content that breaks the law is spread by the platform, and whether the platform is unlawfully non-transparent.

This investigation is welcome. However, the Irish Council for Civil Liberties (ICCL) calls for urgent action against toxic algorithms on Twitter / X and other Big Tech platforms – the European Commission should learn from the example of Coimisiún na Meán, Ireland’s new broadcasting and online regulator.

14 December 2023 — ICCL calls on the European Commission to switch off Big Tech’s toxic algorithms. Coimisiún na Meán leading Europe by example, taking on video platforms.

The Irish Council for Civil Liberties (ICCL) has sent the European Commission a report urging it to follow Coimisiún na Meán’s example and switch off Big Tech’s toxic algorithms across the European Union.

The call comes in the wake of a major step taken by Coimisiún na Meán, Ireland’s new broadcasting and online regulator. In its draft rules for video platforms like YouTube and TikTok, the new watchdog has ordered them to stop building intimate profiles about our children – or any person whose age is unproven – in order to then manipulate them for profit by artificially amplifying hate, hysteria, suicide and disinformation in their personalised feeds.

Speaking today, Dr Johnny Ryan, a Senior Fellow of ICCL, said:

“Coimisiún na Meán is leading the world by forcing Big Tech to turn off its toxic algorithms. People – not Big Tech’s algorithms – should decide what they see and share online. The European Commission should learn from Coimisiún na Meán’s example, and give everyone in Europe the freedom to decide.”

Algorithmic “recommender systems” select emotive and extreme content and show it to people who the system estimates are most likely to be outraged. These outraged people then spend longer on the platform, which allows the company to make more money showing them ads.

These systems are acutely dangerous. Just one hour after Amnesty’s researchers started a TikTok account posing as a 13-year-old child who views mental health content, TikTok’s algorithm started to show the child videos glamourising suicide.[1]

They also amplify extremism. Meta’s own internal research reported that “64% of all extremist group joins are due to our recommendation tools… Our recommendation systems grow the problem”.[2] Without algorithmic amplification, material from tiny extremist groups would never be widely seen.

The European Commission recently reported that Big Tech’s recommender systems aided Russia’s disinformation campaign about its invasion of Ukraine.[3]

Digital platforms have a very poor record of self-improvement and responsible behaviour. After years of scandals and purported fixes by YouTube, nearly three quarters of the problematic[4] content seen by more than 37,000 volunteers in Mozilla’s crowdsourced study of YouTube was content that YouTube’s own recommender system had amplified.[5]

Dr Ryan concluded:

“Social media was supposed to bring us together. Instead, it tears us apart. Europe needs a rapid response to this problem, and Coimisiún na Meán has shown the way forward.”

Ends

Note for editors

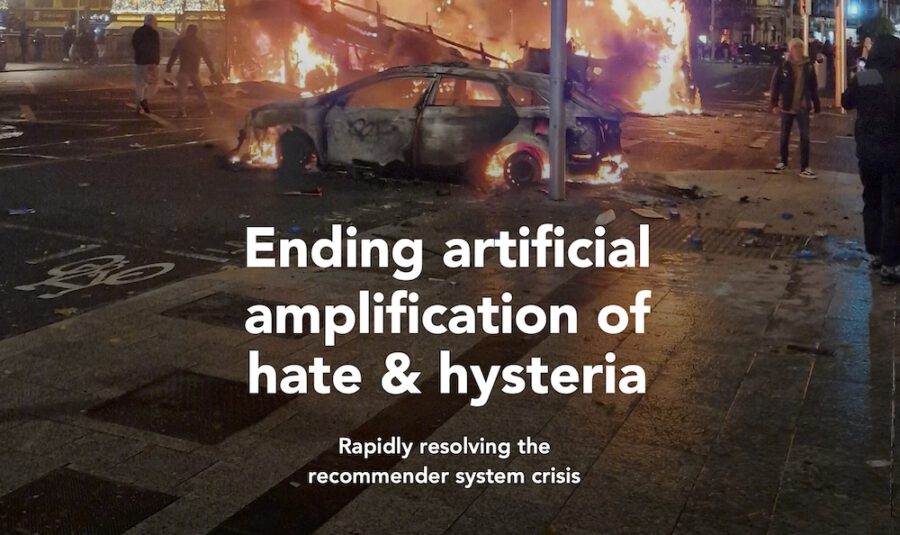

The report from ICCL to the European Commission, “Ending artificial amplification of hate and hysteria”, is available at https://www.iccl.ie/wp-content/uploads/2023/12/Ending-artificail-amplification-of-hate-and-hysteria.pdf

European Commission statement on formal proceedings against Twitter / X: https://ec.europa.eu/commission/presscorner/detail/en/ip_23_6709

Notes

[1] https://www.amnesty.org/en/latest/news/2023/11/tiktok-risks-pushing-children-towards-harmful-content/.

[2] “Facebook Executives Shut Down Efforts to Make the Site Less Divisive”, Wall Street Journal, 26 May 2020 (URL: https://www.wsj.com/articles/facebook-knows-it-encourages-division-top-executives-nixed-solutions-11590507499). This internal research, conducted in 2016, was confirmed again in 2019.

[3] “Digital Services Act: Application of the Risk Management Framework to Russian disinformation campaigns”, European Commission, 30 August 2023 (URL: https://op.europa.eu/en/publication-detail/-/publication/c1d645d0-42f5-11ee-a8b8-01aa75ed71a1/language-en), p. 64.

[4] “YouTube Regrets: A crowdsourced investigation into YouTube’s recommendation algorithm”, Mozilla, July 2021 (URL: https://assets.mofoprod.net/network/documents/Mozilla_YouTube_Regrets_Report.pdf), pp. 9-13.

[5] Ibid., p. 17.

Media queries:

- Ruth McCourt, ruth.mccourt@iccl.ie / 087 415 7162

Available for interview:

- Dr Johnny Ryan, Senior Fellow, ICCL
